Web services or report teams frequently have a need to display top N or last N rows from a table or group of joined tables, with some criteria applied.

Engineers commonly make the following mistake when designing queries and indexes to solve the top N query problem: they only create an index matching the leading predicate.

Common misconceptions:

- Order of the columns in index is not important

- When building an index we choose columns with good selectivity used as a query predicate.

- Select * from table where rownum <>

- Service has been tested and it works until customer with many records logs in.

How to identify problem:

If Top N is not implemented or if it is not implemented properly query can cause problems for application and for database. It usually can be recognized by increased customer latencies or high CPU utilization if all sessions keep reading same blocks or High I/O when each request asks for new data:

- Latency

- High user CPU

- High I/O

Incorrect implementation:

Order of parsing is WHERE, ROWNUM, ORDER BY. Data will be read and then sorted.

Correct inefficient:

We pull ORDER BY to inner query and rownum to outer. Now we get correct data but it is not efficient. Index is built on predicate which is not good enough for Top N.

To have efficient query we have to build an index on WHERE clause column and ORDER BY column. Now query will be able to walk the index and stop when increment reaches 5. Therefore STOPKEY can work. How do we know: there is not ORDER BY STOPKEY.

So now that we have learned how to use STOPKEY and how to write an efficient Top N query, let's see how we can do it with pagination.

First what we can't do. We can't start counting from lower limit.

We have to get all the data and then filter bottom. Now when we know how to write pagination correctly we understand that we can't use this to paginate through all data because every time we read them all and filter up to lower limit.

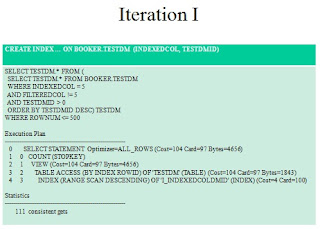

We have iterate. How can we use Top N (STOPKEY) to achieve this? By remembering where we stopped last time and include that unique value in our index. So index now is predicates, unique value and ORDER BY. Since you do want to iterate through all data then usually you can ORDER BY on same unique value you will use to remember where you finished in last iteration.

So just remember last value and pass it again. You will not see "sort order by" STOPKEY. Also as you iterate trough data number of consistent gets will stay same. - That is what we want.

Now we will have more examples with different requirements. So what if we have predicate that requires range scan that is not in sync with order by column. Index is not sorted the way we want because first column has multiple values and it sorted on that column, while we need data to be sorted on second column.

Now we will have more examples with different requirements. So what if we have predicate that requires range scan that is not in sync with order by column. Index is not sorted the way we want because first column has multiple values and it sorted on that column, while we need data to be sorted on second column.If we have multiple tables, ORDER BY must be in sync with order of data query will read. As long as we can follow first index data, step to second index follow that index data, step to third index follow that index data and go back. If all of this matches the ORDER BY clause then STOPKEY will work.

So finally how do we know it works?

Good execution plan:

Following must match:

- Combination of lead predicate columns and all order by columns

- All index columns

- Both are sorted in same direction

Quick check. If your Execution Plan has following step your implementation is incorrect:

SORT ORDER BY STOPKEY